Supercharge Your Data Pipeline: 10 Game‑Changing Tricks

10 Smart Ways to Keep Your Data Pipeline Running Like a Well‑Tuned Machine

In the wild world of data, a smooth pipeline is the secret sauce that turns raw numbers into actionable gold. Below are ten playful yet practical techniques that will push your data stream from sluggish to spry.

1. Automate Your Way to Effortless Efficiency

- Let automation handle the housekeeping—schedule regular data fetches, trigger transformations, and set up alerting for anomalies.

- Bring the “bot‑herding” mindset: fewer manual clicks mean a lower chance of errors and more time for coffee.

2. Cloud‑First: Let the Sky Upgrade You

- Leverage elastic compute resources; scale up when you have a data surge, scale down when the coffee breaks are over.

- Use managed services to offload infrastructure headaches—think of it as hiring a personal assistant for your data.

3. Gather and Consume Data Wisely

- Choose aggregation tools that condense your data into bite‑size batches; moving less material saves bandwidth.

- Filter out the noise before you ship it; the less chatter, the faster your pipeline.

4. Paint a Clean Canvas

- Implement data cleansing routines: deduplicate, correct typos, and normalize formats.

- Clean data is like freshly washed dishes—looks great and works better.

5. Keep a Weather Eye on Quality

- Spot anomalies with real‑time dashboards; treat them as weather alerts—addressed promptly, they stay harmless.

- Use metric thresholds to catch outliers before they become messes.

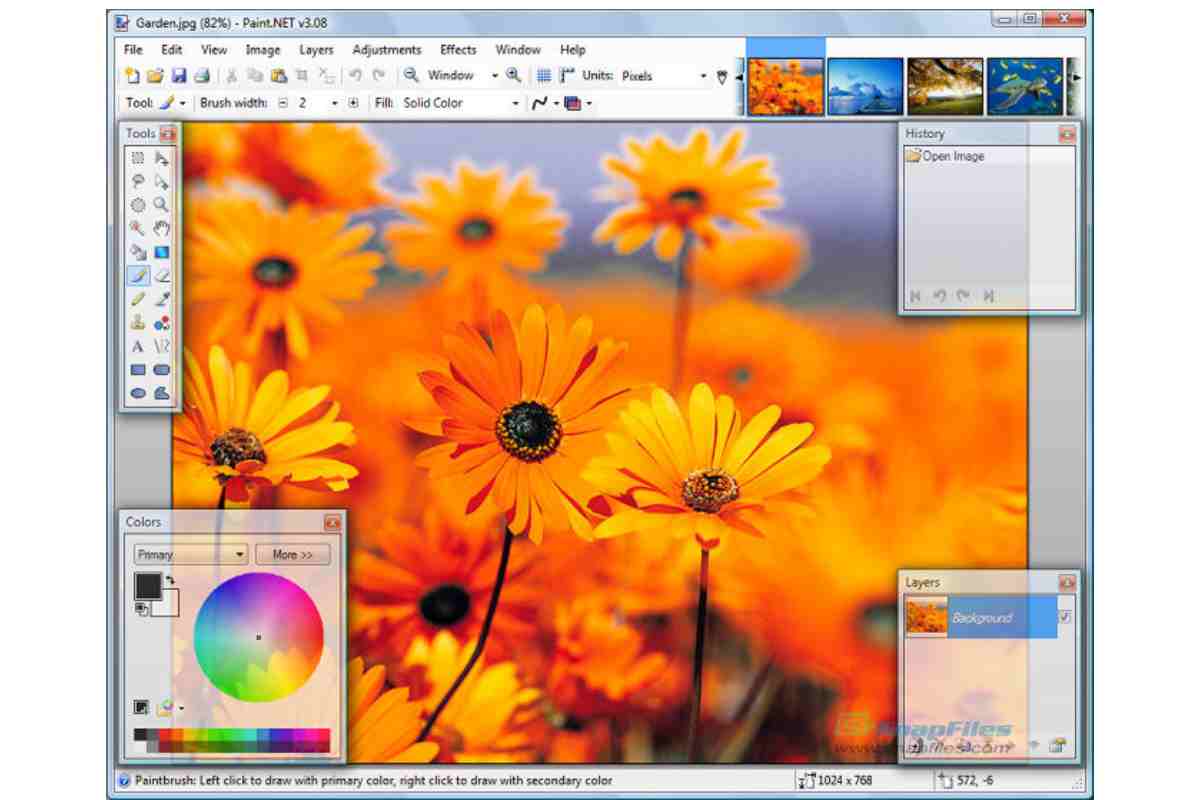

6. Turn Numbers into Stories

- Employ visualization tools to make patterns pop—graphs are easier to read than raw logs.

- A picture is worth a thousand data points.

7. Lock the Gates: Data Security Is No Joke

- Encrypt data at rest and in transit; think of it as a vault for your most valuable insights.

- Define permissions that let only the right hands (and hearts) touch the river of information.

8. Hug Your Performance Metrics

- Benchmark throughput and latency; then brag when you beat the numbers.

- Fine‑tune indexes and queries to shave milliseconds off response times.

9. Roll with the River of Change

- Adopt CI/CD pipelines for data rollouts—less surprise, more sprint.

- Stay agile: adjust data schemas as your business universe expands.

10. Continuous Optimization: Because ‘Good Enough’ Is Not Enough

- Set up a continuous loop: collect feedback, tweak, retest, like a chef refining a recipe.

- Celebrate wins, learn from stalls, and always aim for smoother flow.

Final Thoughts

Think of your data pipeline as a long, winding river. With the right tools, a dash of automation, and a sprinkle of humor, you can keep it flowing smoothly, delivering fresh, clean, and secure data to power your decisions. Happy plumbing—may your data always run hot!

1. Automate Wherever Possible

Streamline Your Data Workflow: Why Automation Rocks

Picture your data pipeline as a relay race where the baton is a bunch of raw numbers and snippets. If you hand that baton to a tired runner (you), the whole team slows down and the chances of slipping increase.

Let’s be honest: manual data chores are a well‑known drain on both time and money. Automation steps in like a supercharged teammate, passing the baton faster and with fewer wobbles.

Here’s Why You Should Automate

- Time and Cash Saver: Your hands are busy—why waste them on repetitive tasks when a script can do it in seconds?

- Fresh Energy for Big Ideas: Free up mental bandwidth to dive into deeper analysis instead of juggling spreadsheets.

- Fewer Human Slip‑Ups: Automated runs are a reliable crew. A tested script does not mistype a value, misplace a comma, or skip a row the way a tired human can.

- Speed to Action: Results hit the desk quicker, so you can pivot faster when trends change.

Spot the Automation Gold

Here are a few ops where a quick automation win could make your life easier.

- Data Integration: Pull all those sources together automatically, no manual copy‑paste nightmares.

- ETL (Extract, Transform, Load): Fire up pipelines that fetch, tidy, and stash the data smooth as butter.

- Data Cleaning: Automatically fix duplicates, out‑of‑range values, or sloppy formatting with a single command.

So next time you’re staring at a mountain of raw numbers, ask yourself: “Can I automate this?” Chances are the answer is yes, and your pipeline will thank you.
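To make the "fetch, tidy, and stash" idea concrete, here is a minimal sketch of a pipeline runner in Python. The step functions (`extract`, `transform`, `load`) and the `alert` callback are hypothetical placeholders for illustration, not part of any particular tool; in practice a scheduler such as cron would call `run_pipeline` on a timetable.

```python
def run_pipeline(steps, alert):
    """Run (name, function) steps in order, feeding each result to the next.

    On the first failure, call alert(message) and stop. Returns True on success.
    """
    data = None
    for name, step in steps:
        try:
            data = step(data)
        except Exception as exc:
            alert(f"step '{name}' failed: {exc}")
            return False
    return True


# Hypothetical steps, for illustration only.
def extract(_):
    return [" 42 ", "7", "oops"]


def transform(rows):
    return [int(r) for r in rows]  # raises ValueError on "oops"


def load(rows):
    print(f"loaded {len(rows)} rows")
    return rows


alerts = []  # stand-in for email, chat, or pager notifications
ok = run_pipeline(
    [("extract", extract), ("transform", transform), ("load", load)],
    alerts.append,
)
print(ok, alerts)
```

Here the bad row stops the run at the transform step and leaves one message in `alerts`, so nobody has to notice the failure by hand.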

2. Leverage Cloud Computing Resources

Why Cloud Computing Is a Game‑Changer for Your Data Pipeline

Think of your data pipeline as a busy highway—traffic keeps coming, you’re always looking for a new lane, and you wouldn’t want to break the whole system just to add a little more speed. Cloud computing gives you that extra lane without tearing down your entire infrastructure.

Key Benefits in a Nutshell

- Instant Scalability – ramp up or down with a click (no scrambling for extra hardware).

- No Upfront Costs – pay only for what you’ll actually use.

- Flexibility – tweak your pipelines on the fly to adapt to new business needs.

- Speed & Reliability – big players like AWS and Azure run on redundant, globally‑distributed data centers.

- Focus on Innovation – your team can invest more time into data science, less into server maintenance.

Getting Started Made Easy

Want to start? Pick a provider that suits your stack:

- Amazon Web Services (AWS) – go auto‑scaling and find a billing plan that matches your budget.

- Microsoft Azure – a natural fit if you already use Office or .NET; Azure Data Factory is its data‑integration service.

- Google Cloud Platform – best for machine‑learning heavy workloads; try its BigQuery for lightning‑fast analytics.

Final Thought

Switching to the cloud is like upgrading to a self‑driving car. You’ll spend less time worrying about “hardware maintenance” and can focus on getting the most out of your data. So buckle up, scale responsibly, and let the cloud power your next big breakthrough!

3. Utilize Data Aggregation Tools

Crunching Numbers One Click at a Time

Ever wished you could scoop up data from all the chaos around you, stash it neatly, and then sip a coffee while the trends flow to your screen? That’s exactly what a good data aggregation tool does.

Why the Shortcut Matters

- Time‑Saver: You can ditch the endless copy‑paste routine and let the software do the heavy lifting.

- Pattern‑Parade: With everything in one spot, spotting spikes, dips, or sudden anomalies turns from detective work into a quick snapshot.

- Personalized Pipeline: Pick tools that fit your workflow, not a one‑size‑fits‑all buffet. The right fit lets you squeeze every drop of insight from the data.

Play the Numbers Game

Think of your data sources as a lineup of contestants. A great aggregation tool hands them their favorite stat‑cards, lines them up, and lets you scroll through the results like a high‑energy sports broadcaster. If you’re not sure which tool is up for the job, keep those requirements on the table and let the software do the matchmaking.
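For a feel of what "everything in one spot" means, here is a small Python sketch that rolls records from several sources into one set of totals. The source names, field names, and sample figures are made up for illustration; a real aggregation tool does the same thing at far larger scale.

```python
from collections import defaultdict


def aggregate(sources, key, value):
    """Sum `value` per `key` across every record in every source."""
    totals = defaultdict(float)
    for records in sources.values():
        for record in records:
            totals[record[key]] += record[value]
    return dict(totals)


# Hypothetical sample data from two sources.
web_orders = [
    {"day": "2024-01-01", "amount": 120.0},
    {"day": "2024-01-02", "amount": 80.0},
]
store_orders = [{"day": "2024-01-01", "amount": 30.0}]

daily = aggregate({"web": web_orders, "store": store_orders}, key="day", value="amount")
print(daily)  # {'2024-01-01': 150.0, '2024-01-02': 80.0}
```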

4. Keep Your Data Clean

Why Keep Your Data on the Straight and Narrow

Think of data like a fresh batch of fruit: any little bruise or mold can ruin the whole fruit bowl. Whether you’re crunching numbers for a board meeting or just figuring out the best pizza topping, clean data is the secret sauce that turns raw juice into a real, tasty analysis. Skip it and you’ll end up with a recipe that’s all over the place.

What’s the Big Deal?

Cleaning your data is similar to a spring cleaning spree:

- Spotting Annoying Errors – those typos, mismatched fields, or double‑entries that sneak in like uninvited guests.

- Handling Outliers – extreme values that can push your results up or down like a ride‑share driver taking the wrong route.

- Ensuring Consistency – making sure every value follows the same rules so your analysis stays honest.
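The three chores above can be sketched in a few lines of Python. This is only an illustration under assumed conditions: the `email` and `age` fields and the 0 to 120 range are hypothetical, and your own rules will differ.

```python
def clean_records(records, min_age=0, max_age=120):
    """Normalize emails, drop out-of-range ages, and remove duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        email = rec["email"].strip().lower()  # consistency: one canonical format
        if email in seen:  # duplicate entry
            continue
        age = rec["age"]
        if not (min_age <= age <= max_age):  # out-of-range value
            continue
        seen.add(email)
        cleaned.append({"email": email, "age": age})
    return cleaned


raw = [
    {"email": " Ana@Example.com ", "age": 34},
    {"email": "ana@example.com", "age": 34},  # duplicate once normalized
    {"email": "bo@example.com", "age": 340},  # almost certainly a typo
]
print(clean_records(raw))  # [{'email': 'ana@example.com', 'age': 34}]
```

Dropping out-of-range rows is the simplest choice; flagging them for review instead is often the better one when the data is scarce.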

Why Clean Regularly?

Data isn’t static. Small changes can ripple like a stone dropped into a pond and fundamentally alter what you’re seeing. If you rely on the numbers, tidy them up on a routine basis so the reports you deliver earn the confidence placed in them.

Feel the Difference

Cleaning data isn’t just a tedious chore; it’s the moment when the numbers stop fighting back and your conclusions become accurate, trustworthy, and a little less stressful. Keep your data clean and keep your sanity intact.

5. Monitor Your Data Quality

Keeping Your Data in Check

Picture this: your data pipeline is a sleek, high‑speed train. If you ignore the quality of the tracks, the train will rattle or, worse, derail. That’s why watching your data’s health is essential.

Spotting Trouble Before the Crash

- Performance Changes: Got a sudden drop in speed or accuracy? It’s time to pause the ride and inspect.

- Accuracy Alerts: Flaky numbers can lead to wrong decisions—catch them early!

- Bias Detection: Even a tiny skew can distort outcomes. Stay sharp so your results stay fair.

Quick Fixes, Easy Wins

When you spot a glitch, grab your tools and jump into corrective action. The sooner you address it, the less it hurts the whole system.

Remember—perfect data is a myth. Continuous monitoring is the clear win.
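One simple way to automate that watchfulness is a threshold check on a metric you already track, such as daily row counts. The sketch below uses a z-score against recent history; the three-standard-deviation threshold and the sample numbers are assumptions to tune, not recommendations.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` if it sits more than z_threshold sample standard
    deviations from the mean of `history` (needs at least two points)."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly flat history: any change at all is worth a look.
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical daily row counts.
row_counts = [1000, 1020, 980, 1010, 990]
print(is_anomalous(row_counts, 1005))  # False
print(is_anomalous(row_counts, 400))   # True
```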

6. Use Data Visualization

Making Sense of Data: One Chart at a Time

Ever feel like your data is a tangled knot that refuses to untangle itself? Fear not—visualization is the wizard’s wand that turns chaos into crystal‑clear insight.

Pictures: The Real MVP

- Charts & graphs: Think of them as your friendly sidekicks, pointing out hidden patterns that raw numbers would hide.

- Interactive visuals: Zoom, hover, and drill down to let the data do the talking.

Team Collaboration Made Fun

When everyone is looking at the same visual, ideas pop like popcorn and the team starts discussing, discovering, and celebrating together.

Why It Matters

With data visualized, you quickly spot trends, catch outliers, and tell stories that your colleagues will actually get. And because everyone’s on the same page, brainstorming sessions become more productive and a bit more exciting.

7. Set Up Data Security Protocols

Keep Your Data Safe: A Quick Guide to Protecting Your Pipeline

Why It’s Worth the Effort

Imagine your data as a valuable diary. Encryption seals it up, access control decides who gets the key, and a policy is the rulebook that keeps everyone honest. Without these safeguards, your private information can end up public, whether through a hack or an accidental leak.

Step‑by‑Step Checklist

- Encrypt Everywhere – Turn every file and transmission into a locked box. Trust the modern crypto standards, not your old “scrambled data” trick.

- Fine‑Tune Access Rights – Think of access control as a guest list. Only the people (and systems) that truly need the data get the keys.

- Set Up a Policy – Draft a single, clear rulebook that covers:

  - Who can read, write, and modify data.

  - Where the data should live (cloud, on‑prem, hybrid).

  - What happens when a breach or leak occurs.

- Audit & Remediate – Regularly check the logs, and if you spot a bleed, patch it before it turns into a leak.

- Educate Your Team – A well‑trained crew can spot suspicious activities faster than a cyber‑threat can spread.
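The "who can read, write, and modify" part of the checklist can be expressed as a small role-based lookup. The role names and permission sets below are hypothetical; the point of the sketch is the deny-by-default behavior, where anything not explicitly granted is refused.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "modify"},
}


def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("analyst", "read"))   # True
print(is_allowed("analyst", "write"))  # False
print(is_allowed("intern", "read"))    # False, role is not on the list
```

A real deployment would lean on the access controls of your database or cloud provider rather than a hand-rolled dictionary, but the policy shape is the same.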

What Happens If You’re Unprepared?

Think of negligence as a leaky faucet in a kitchen—every drop of misstep can seep out and eventually flood the whole house. A single unsecured endpoint, a misconfigured pipeline, or an outdated policy can leave your data open to malicious actors or accidental misuse.

Bottom Line

Data security is like the shield on a knight’s armor: it’s your most reliable defense. By encrypting, locking down access, setting policies, and staying vigilant, you keep your pipeline well defended and your data safer from both villains and blunders.

8. Monitor Performance

Keeping Your Data Pipeline Shipshape

Running a data pipeline isn’t just about moving numbers from point A to point B. If you want it to keep humming along without hitting a snag, you’ve got to keep a sharp eye on its performance. Think of it as checking the pulse of your data engine: you’ll spot trouble before it turns into a full‑blown crisis and learn how to make every mile faster.

What to Watch For

- Outages: When the pipeline suddenly goes silent—like a router that forgets to turn on—stop right there and investigate.

- Delays: Even a few extra seconds can pile up on a large dataset; treat it like a traffic jam that could throw off your schedule.

- Performance hiccups: Any glitch that slows things down (think of a sneeze in the middle of a marathon) needs a speedy fix.

Quick Fix Checklist

- Check the logs: Scrutinize them for error spikes—those are your early warning lights.

- Audit your nodes: Is one machine getting overworked? Balance the load, or swap out the rusty gear.

- Validate inputs: Out-of‑range values can cause chaos; make sure every datum is where it should be.

- Update dependencies: Old libraries can behave like broken glue; upgrade to keep everything tight.
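Checking logs for error spikes is easy to automate. The sketch below assumes a hypothetical log format of `TIMESTAMP LEVEL message`, with ISO-style timestamps and a message on every line; adapt the parsing to whatever your pipeline actually writes.

```python
from collections import Counter


def error_counts_per_minute(log_lines):
    """Count ERROR lines per minute (assumed format: 'TIMESTAMP LEVEL message')."""
    counts = Counter()
    for line in log_lines:
        timestamp, level, _message = line.split(" ", 2)
        if level == "ERROR":
            counts[timestamp[:16]] += 1  # keep 'YYYY-MM-DDTHH:MM'
    return counts


def spikes(counts, threshold):
    """Return the minutes whose error count reaches the threshold."""
    return sorted(minute for minute, n in counts.items() if n >= threshold)


# Hypothetical log excerpt.
logs = [
    "2024-01-01T10:00:05 INFO batch started",
    "2024-01-01T10:00:09 ERROR connection reset",
    "2024-01-01T10:01:02 ERROR timeout",
    "2024-01-01T10:01:15 ERROR timeout",
    "2024-01-01T10:01:40 ERROR timeout",
]
print(spikes(error_counts_per_minute(logs), threshold=3))  # ['2024-01-01T10:01']
```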

Pro Tips for a Smooth Journey

1. Automate monitoring: Set up alerts that shout when something goes wrong—no manual eyeballing required.

2. Keep a data hygiene routine: Clean, trim, and standardize your inputs regularly. A tidy dataset is a happy dataset.

3. Embrace latency tolerance: Not every delay is catastrophic; detect and absorb minor lag before it multiplies.

In the end, proactive monitoring turns your pipeline from a “broken pipe” into a “well-oiled machine.” Give it the love and attention it deserves, and it will run like a dream—minus the scary hiccups. Happy data‑driving!

9. Adapt To Change

Keeping Your Data Pipeline Nimble

Think of your data pipeline as that ultra‑responsive teen who can pivot fast when trends shift, regulatory news hits, or a new data source drops into the room. The real trick is making sure it can do that without drama.

Why Flexibility Matters

- New data sources: when a partner starts streaming logs in a different format, you need a plan B, not a scramble.

- Regulation roller‑coaster: data compliance rules are like the weather—sometimes scorching, sometimes snow‑topped, so your pipeline should roll with the changes.

- Market wildcards: market trends can flip overnight; your flow must be ready to change lanes.

Swift & Efficient Adaptation

Below are quick wins that keep the results accurate and irritation at bay:

- Build modular components that swap out like Lego blocks.

- Automate schema detection so you’re not waiting for manual updates.

- Test in a sandbox before swapping into production—so the live system doesn’t feel like a live‑action movie.

- Set up alerts that scream “new source.”
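Automated schema detection can start very small: infer the field types seen in an incoming batch and compare them with what you expect. The field names and the expected schema below are hypothetical, and the sketch only looks at top-level fields.

```python
def infer_schema(records):
    """Map each field name to the set of Python type names observed for it."""
    schema = {}
    for rec in records:
        for field, value in rec.items():
            schema.setdefault(field, set()).add(type(value).__name__)
    return schema


def schema_drift(expected, observed):
    """Report fields that were added, removed, or changed type."""
    shared = expected.keys() & observed.keys()
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "changed": sorted(f for f in shared if expected[f] != observed[f]),
    }


# Hypothetical expected schema and incoming batch.
expected = {"id": {"int"}, "ts": {"str"}}
batch = [
    {"id": 1, "ts": "2024-01-01", "region": "eu"},
    {"id": "2", "ts": "2024-01-02", "region": "us"},  # id arrives as a string
]
print(schema_drift(expected, infer_schema(batch)))
# {'added': ['region'], 'removed': [], 'changed': ['id']}
```

Running a check like this in the sandbox gives you a readable diff before anything touches production.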

In short, a flexible pipeline is your backstage pass to staying ahead of the curve: it gives you the freedom to roll out changes on the fly while keeping your data rock‑steady.

10. Continually Optimize

Keeping Your Data Pipeline Fresh and Lean

Why it matters

Think of your data pipeline as a smoothie. The more you refuel and tweak the recipe, the fresher and more nutritious it stays. A pipeline that runs at top speed without spilling a single byte means happier analysts and happier end‑users.

Spotting the “sour” spots

- Data collection clog‑ups: Are repeated requests making the system wait like rush‑hour traffic?

- Quality check hiccups: Do you test the data for leaks and gaps as it flows through? If not, you end up tidying it afterwards.

- Confusing logs: If the logs are hard to make sense of, it’s time for a cleanup.

Bottom‑line improvement tactics

- Trim the funnel: Remove redundant ingestion steps and keep only the ones that actually bring you something useful.

- Speed‑test checkpoints: Put a tiny stopwatch in your ETL stages to catch slow parts. Even the tiniest lag can add up to hours of wasted compute.

- Automate the sanity checks: Let your pipeline ask the question: “Does the data fit the shape?” Add rules that auto‑flag anomalies. No more guessing games!

- Re‑watch the playbacks: Inspect logs and query histories as if they were a movie. The better you understand what the logs are telling you, the less superfluous overhead the pipeline produces.
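The "tiny stopwatch" from the tactics above can be a context manager wrapped around each stage. The stage names and the toy workload here are placeholders; the pattern is what matters.

```python
import time
from contextlib import contextmanager

timings = {}  # stage name -> accumulated seconds


@contextmanager
def timed(stage):
    """Record wall-clock time spent inside the with-block under `stage`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = timings.get(stage, 0.0) + time.perf_counter() - start


# Placeholder stages standing in for real ETL work.
with timed("extract"):
    rows = list(range(100_000))
with timed("transform"):
    rows = [r * 2 for r in rows]

slowest = max(timings, key=timings.get)
print(f"slowest stage: {slowest} ({timings[slowest]:.4f}s)")
```

Because the timing is recorded in a `finally` clause, a stage that raises an exception still shows up in `timings`, which is often exactly the stage you want to look at.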

The “Live” Edition

Optimization is a marathon, not a sprint. Schedule a quick review every few months; think of it as a routine check‑up for your data system. Catch issues early and you’ll avoid painful data delays.

Keep it fun, keep it efficient

By constantly fine‑tuning the data pipeline, you’re not just reducing bottlenecks; you’re saving time, energy, and a whole lot of headaches. After all, a smooth, well‑tuned pipeline is a happy pipeline. Happy data, happy you!

Final Thoughts

Supercharge Your Data Pipeline

Picture your pipeline as a shiny, turbo‑charged express train. To keep it running smoothly, you need to tweak a few gears, and I’m here to show you how.

1. Automate Like a Boss

- Schedule everything—give your jobs a routine so they don’t need manual micromanagement.

- Use cron jobs or cloud‑native schedulers to keep the rhythm humming.

- Set up alerts for failures; a quick nudge is better than a silent jam.

2. Cloud‑Power You

- Opt for scalable services—think auto‑scaling or serverless functions.

- Leverage cloud storage for durability and low‑cost retrieval.

- Keep cost in check with inventory snapshots and spot instances.

3. Aggregation = Happiness

- Combine logs, metrics, and raw data into a single data lake.

- Apply partitioning and columnar formats to speed up reads.

- Use engines like Apache Spark or Presto for on‑the‑fly queries.

4. Keep It Clean—No Rubber Stamps Allowed

- Run deduplication periodically.

- Validate formats (dates, IDs) before they flood downstream.

- Throw out or archive stale data; clutter kills performance.

5. Quality = Trust

- Set up data quality dashboards to flag anomalies.

- Implement reconciliation checks against source systems.

- Promptly investigate any drop in data reliability.

6. Visualize the Magic

- Create dashboards that show end‑to‑end flow.

- Use stunning charts for latency, throughput, and error rates.

- Let stakeholders peek into the pipeline like a backstage pass.

7. Security Layers: Like Fort Knox

- Encrypt data at rest and in transit, and manage the keys carefully.

- Apply role‑based access control—only the right people get the right data.

- Regularly audit logs and tweak permissions as policies evolve.

8. Performance Surveillance

- Track latency and resource usage across all stages.

- Optimize bottlenecks with targeted scaling or code refactoring.

- Use A/B testing to compare improvements before full deployment.

Final Thought: Flexibility Is Your Secret Weapon

Data pipelines aren’t one‑size‑fits‑all. As market demands shift, tweak your architecture: add a new data source, swap storage, or adjust throttling limits. The key? Stay nimble, celebrate small wins, and keep your data flowing like traffic on a well‑maintained highway.