PCI-X Unpacked: From Basics to Cutting‑Edge Features

Unpacking PCI-X: What It Is, Why It Matters, and the Two Major Versions

Ever wondered what PCI-X stands for and why it's still a big deal? Let's break it down in plain talk: no jargon, just the facts you need, wrapped in a pinch of humor.

What Exactly Is PCI-X?

PCI-X is short for Peripheral Component Interconnect – eXtended. It’s basically a fancy cousin of the older PCI standard, designed to slingshot more speed and bandwidth into computer systems, especially servers and high‑performance machines. Think of it as the high‑speed highway that lets data travel faster between your computer’s motherboard and its expansion cards.

History & Motivation: Why the Upgrade Happened

Back in the late 1990s, servers were craving faster data rates, and someone stared at the PCI specs and thought: "Hey, we could push this to the next level!" PCI-X was born out of two main motives:

- Speed: Standard PCI topped out at 66 MHz, and most slots ran at 33 MHz on a 32-bit bus (about 133 MB/s), leaving serious performance gaps.

- Scaling: New network and storage hardware kept demanding more bandwidth than a shared PCI bus could deliver.

So, to keep up with booming networking, storage, and server workloads, the industry rolled out a new, extended spec. Boom: more bandwidth, a more efficient bus protocol, and better reliability for all those data-hungry systems.

Types of PCI-X: The Two Main Standards

While the idea behind PCI-X was simple, it boiled down to two concrete releases that actually hit the market.

PCI‑X 1.0

This initial version was submitted for approval in 1998 by IBM, HP, and Compaq. It raised the clock from PCI's 66 MHz ceiling to 133 MHz on a 64-bit bus, for a peak of about 1,066 MB/s. The consequence? Roughly an 8× jump in raw throughput over a basic 32-bit, 33 MHz PCI slot (about 133 MB/s). It also introduced split transactions, so a device waiting on slow data no longer hogs the bus.
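The throughput comparison is straightforward arithmetic. A minimal worked version, assuming one transfer per clock, the nominal 33 1/3 MHz and 133 1/3 MHz clocks, and no protocol overhead:

```latex
\begin{aligned}
B_{\text{PCI}} &= 33.\overline{3}\ \text{MHz} \times 4\ \text{B} \approx 133\ \text{MB/s} \quad (32\text{-bit bus})\\
B_{\text{PCI-X}} &= 133.\overline{3}\ \text{MHz} \times 8\ \text{B} \approx 1066\ \text{MB/s} \quad (64\text{-bit bus})\\
\frac{B_{\text{PCI-X}}}{B_{\text{PCI}}} &= \underbrace{\frac{133.\overline{3}}{33.\overline{3}}}_{\text{clock}} \times \underbrace{\frac{8}{4}}_{\text{width}} = 4 \times 2 = 8
\end{aligned}
```

Real-world throughput is lower once arbitration and protocol phases are counted; these are theoretical peaks.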

PCI‑X 2.0

Ratified in 2003, PCI-X 2.0 was all about pushing the same bus harder. The base clock stayed at 133 MHz, but new double- and quad-data-rate modes (PCI-X 266 and PCI-X 533) moved two or four transfers per clock tick, for roughly 2,133 MB/s and 4,266 MB/s. It also added ECC error correction and stayed backward compatible with earlier PCI-X and PCI cards.

Why Should You Care?

If you’re into server building, virtualization, or just hooking up high‑speed storage, PCI‑X gives you:

- Greater bandwidth: For transfer‑heavy workloads.

- Improved reliability: PCI-X 2.0's error-correcting codes catch bus errors before they corrupt data.

- Better efficiency: Split transactions keep slow devices from tying up the bus.

And it's not purely history: PCI-X still turns up in legacy servers and older boards that remain in service. So, next time someone asks about PCI-X, you'll have a quick, witty answer, plus a few laughs.

PCI-X Definition

What exactly is PCI-X?

PCI-X (Peripheral Component Interconnect-Extended) is a beefed-up version of the classic PCI standard. Think of it as the brawnier cousin: faster, backward compatible, and surprisingly handy for servers and workstations. It runs on a 64-bit data bus, though 32-bit devices are supported too.

Why do we need it?

- Server workloads demand huge bandwidth—PCI-X delivers it with a turbo‑charged clock.

- Standard PCI usually runs at 33 MHz (66 MHz at most); PCI-X goes up to 133 MHz, and PCI-X 2.0 reaches an effective 533 MT/s by moving four transfers per 133 MHz clock tick.

- It keeps the electrical profile similar to legacy PCI, so most existing cards still work; the catch is that a shared bus slows down to match its slowest card.
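The "slowest card wins" rule can be sketched as a tiny function. This is a simplified model, not the actual PCI-X initialization sequence: it assumes the bus simply runs at the lowest clock any card supports and drops to conventional PCI mode if any card lacks PCI-X support. The `Card` type and the card names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    """A hypothetical expansion card, described only by what matters here."""
    name: str
    pcix: bool    # True if the card speaks the PCI-X protocol
    max_mhz: int  # fastest bus clock the card supports


def bus_mode(cards: list[Card]) -> tuple[str, int]:
    """Return the (protocol, clock MHz) a shared bus settles on (simplified)."""
    if not cards:
        raise ValueError("need at least one card on the bus")
    # One conventional PCI card forces the whole bus into PCI mode.
    protocol = "PCI-X" if all(card.pcix for card in cards) else "PCI"
    # Every card shares the same clock, so the slowest one sets the pace.
    return protocol, min(card.max_mhz for card in cards)


if __name__ == "__main__":
    raid = Card("raid", pcix=True, max_mhz=133)
    nic = Card("nic", pcix=True, max_mhz=100)
    legacy = Card("legacy-scsi", pcix=False, max_mhz=33)
    print(bus_mode([raid, nic]))
    print(bus_mode([raid, nic, legacy]))
```

Pairing the 133 MHz card with the 100 MHz card gives `("PCI-X", 100)`; adding the 33 MHz conventional card drags everything to `("PCI", 33)`.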

Key Specs of PCI‑X 2.0

- Speed bump: Up to 533 MT/s (quad data rate on the 133 MHz clock), with lower-voltage 1.5 V signaling.

- Connector options: Full support for 32‑bit and 64‑bit slots.

- Mini version: A 16-bit variant for embedded gadgets.

How Did It Fare in the Modern World?

As technology progressed, the wide, shared parallel-bus design of PCI-X fell out of favor. Enter PCI Express (PCIe): a serial, point-to-point lane architecture that's sleek, scalable, and much easier to route on crowded boards.

Why PCIe Replaced PCI‑X

- Simpler wiring: It's built around narrow serial lanes rather than one wide, shared parallel bus.

- Speed wins: Each lane is a dedicated point-to-point link, so devices don't fight over a shared bus, and per-lane rates have climbed with each PCIe generation.

- Future‑proof: PCIe’s flexible lane count means it can grow as needs expand.
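To see how lane count scales, here is a back-of-the-envelope sketch assuming first-generation PCIe: 2.5 GT/s per lane with 8b/10b encoding, which works out to 250 MB/s per lane in each direction. Later generations raise the per-lane rate, but the multiply-by-lanes idea stays the same.

```python
# First-generation PCIe: 2,500 megatransfers/s per lane, and 8b/10b encoding
# puts 10 bits on the wire for every 8-bit data byte.
GEN1_MT_PER_S = 2500
WIRE_BITS_PER_BYTE = 10

# Link widths defined by the PCIe specification.
VALID_WIDTHS = (1, 2, 4, 8, 12, 16, 32)


def lane_mb_per_s() -> int:
    """Usable MB/s per lane, per direction, for a Gen1 lane."""
    return GEN1_MT_PER_S // WIRE_BITS_PER_BYTE


def link_mb_per_s(lanes: int) -> int:
    """Aggregate MB/s per direction for an xN Gen1 link."""
    if lanes not in VALID_WIDTHS:
        raise ValueError(f"x{lanes} is not a standard PCIe link width")
    return lanes * lane_mb_per_s()


if __name__ == "__main__":
    for width in (1, 4, 8, 16):
        print(f"x{width}: {link_mb_per_s(width):,} MB/s each way")
```

That prints 250, 1,000, 2,000, and 4,000 MB/s. Unlike a shared PCI-X bus, each device gets its own link, so these numbers aren't divided among every card in the system.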

So while PCI‑X served its era with gusto, today the fast‑serial PCIe lanes have taken the throne, offering a clean, high‑speed pathway that keeps servers and devices humming smoothly.

History

Background and motivation

What’s Up With PCI and Those Annoying Retry Cycles?

Picture this: you're reading data over the PCI bus and the target isn't quite ready to reply. Instead of letting another device have a turn, the bus stays stuck until the target finally has the data. That's the infamous retry-cycle scenario.

Why the Bus Gets Stuck

PCI's old-school design has no split-response mechanism. When a master sends a read request but the target can't answer immediately, the target keeps signaling retries until the data is ready, and no other device can use the bus in the meantime. The result? The entire bus stops dancing, and no other components get a turn.

Split Response—The Game Changer

- Splits the ride: The master sends its request and then disconnects from the bus, giving other devices a chance to keep moving.

- Target hits the tab: When it's ready, the target sends a split response carrying all of the requested data, completing the transaction in a separate beat.

- No more retries: Because nobody burns cycles on retries, bus time goes to actual data transfers.
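The difference shows up clearly in a toy cycle count. This is a hypothetical model, not real PCI timing: one shared bus, one cycle per bus action, a slow target that needs `read_latency` cycles to fetch data, and a second master with other traffic queued. The function names and numbers are illustrative only.

```python
from collections import Counter


def bus_timeline(split: bool, read_latency: int, read_cycles: int,
                 other_cycles: int) -> list[str]:
    """Build a per-cycle log of what the shared bus is doing (toy model).

    Master A issues one read whose target needs `read_latency` cycles before
    the data is ready, then `read_cycles` cycles to transfer it. Master B has
    `other_cycles` cycles of unrelated traffic waiting.
    """
    log = ["A:request"]
    if split:
        # PCI-X style: A disconnects right after the request, so B can use
        # the bus while the target prepares the data.
        overlap = min(read_latency, other_cycles)
        log += ["B:data"] * overlap
        log += ["idle"] * (read_latency - overlap)
        # The target returns all requested data in a split completion.
        log += ["A:split-completion"] * read_cycles
        log += ["B:data"] * (other_cycles - overlap)
    else:
        # PCI style: the pending read ties up the bus with retry cycles
        # until the data is ready, and B has to wait its turn.
        log += ["A:retry"] * read_latency
        log += ["A:data"] * read_cycles
        log += ["B:data"] * other_cycles
    return log


def summarize(log: list[str]) -> dict[str, int]:
    """Count total cycles, cycles lost to retries, and idle cycles."""
    counts = Counter(log)  # missing keys count as 0
    return {
        "total_cycles": len(log),
        "wasted_on_retries": counts["A:retry"],
        "idle": counts["idle"],
    }


if __name__ == "__main__":
    for split in (False, True):
        log = bus_timeline(split, read_latency=6, read_cycles=4, other_cycles=8)
        label = "PCI-X split" if split else "PCI retry"
        print(label, summarize(log))
```

With these numbers the retry version takes 19 cycles (6 of them spent on retries), while the split version finishes in 13, because master B's traffic fills the gap that retries used to waste.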

Enter PCI‑X: Speeding Things Up

Back when PCI was limited to a 66 MHz clock, you probably had to toy around with a PLL to keep those I/O signals from going haywire. That clock speed was like a scooter in a traffic jam.

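PCI-X doubled that speed: yup, 133 MHz! With a faster clock:

- Timing tightens up: Each clock period shrinks from 15 ns to 7.5 ns, so PCI-X registers incoming signals and acts on them one clock later instead of decoding them on the fly.

- A busier bus: Twice the clock means twice the peak transfers, and together with split transactions the bus handles more traffic without that dreaded "stuck" feeling.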

Bottom Line

PCI was clever for its time, but those retry cycles slowed everything down. Split responses and a faster clock in PCI‑X finally gave the bus the freedom to breathe, improving performance and keeping your system from stalling up and down the line.

What are the types?

PCI-X 1.0

PCI-X 1.0: The 1998 PCI Power-Up

In the late 90s the tech giants Compaq, IBM, and HP teamed up to fix a pain point that had been gnawing at server builders: plain PCI was choking on next-gen devices. Think Gigabit Ethernet, Fibre Channel, and Ultra3 SCSI, the stuff that would make a server run like a racehorse. Their solution? PCI-X, submitted for approval in 1998.

Why it mattered

- It codified the proprietary extensions that had been floating around in server boards.

- It addressed several shortcomings of PCI, giving developers a clear roadmap for better bandwidth.

- It allowed processors to be interconnected in clusters, which was a game-changer for data-center performance.

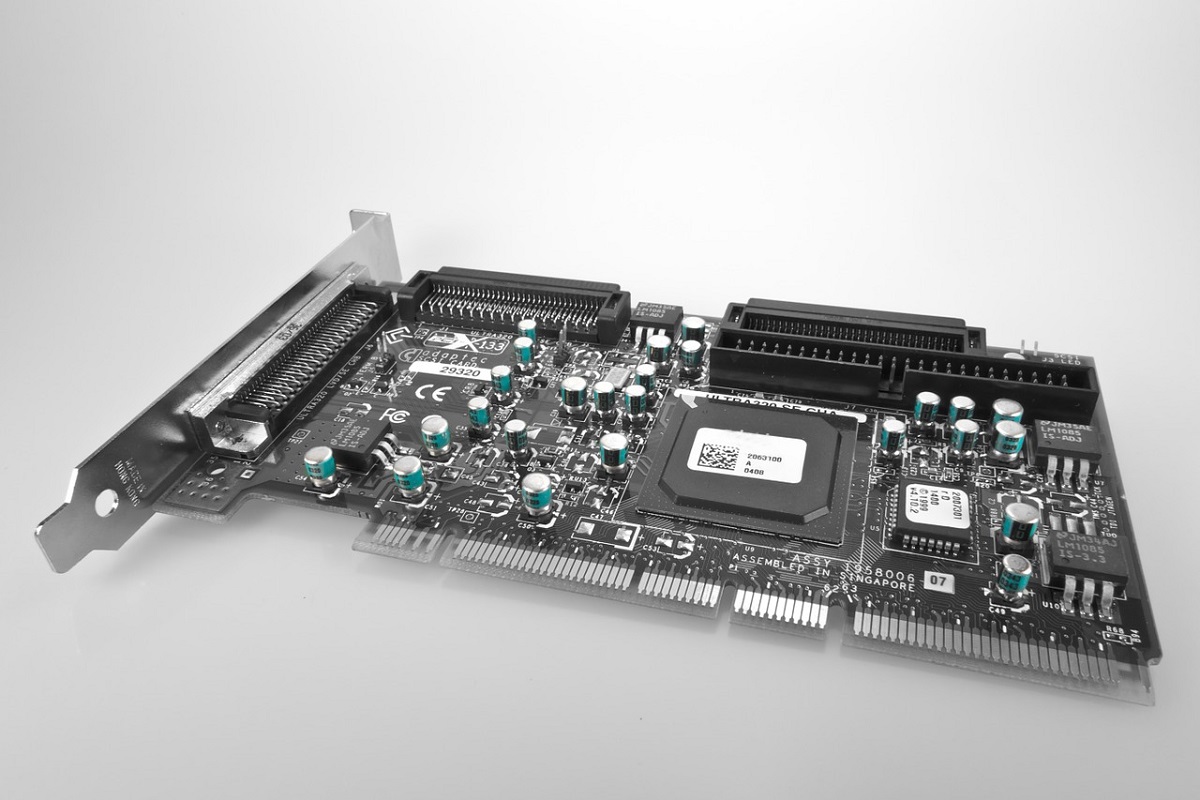

First‑hand success

Just a year after the spec hit the streets, cards for this new breed of server were on shelves, such as the Adaptec AHA-3950U2B, a dual Ultra2 Wide SCSI controller that turned any old server into a powerhouse. And on the box? It was simply labeled "64-bit ready PCI", a subtle nod that it was ready for whatever the future threw at it.

In a nutshell

PCI-X 1.0 was the tech community's "future-proof" answer: starting in 1998, it mapped out how high-speed peripherals and clustered processors could share the same sweet, sweet bus. It was a little signpost that said, "Hey, we're ready for the next big thing." And ready we were.

PCI-X 2.0

PCI‑X 2.0: The 2003 “What’s Up, Speed?” Edition

Speed‑Talk

In 2003 the PCI Special Interest Group (PCI-SIG) officially ratified PCI-X 2.0. It rolled out two fresh variants, both built on the familiar 133 MHz clock:

- PCI-X 266: Double data rate, roughly 2,133 MB/s of pure data flow.

- PCI-X 533: Quad data rate, doubling that to about 4,266 MB/s.
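Those figures fall out of one formula: clock rate × transfers per clock × bytes per transfer. A minimal sketch, assuming a 64-bit bus, the exact 133 1/3 MHz clock, and no protocol overhead; the 16-bit line is hypothetical arithmetic for a narrower link, not a claim about which modes the 16-bit interface actually supports.

```python
from fractions import Fraction

# PCI-X's "133 MHz" clock is really 133 1/3 MHz; keep it exact.
BASE_CLOCK_MHZ = Fraction(400, 3)

# Data transfers per clock tick for each PCI-X mode.
MODES = {
    "PCI-X 133": 1,  # PCI-X 1.0: one transfer per clock
    "PCI-X 266": 2,  # PCI-X 2.0: double data rate
    "PCI-X 533": 4,  # PCI-X 2.0: quad data rate
}


def peak_mb_per_s(transfers_per_clock: int, width_bits: int = 64) -> int:
    """Theoretical peak in MB/s (10**6 bytes/s), truncated; ignores overhead."""
    bytes_per_transfer = width_bits // 8
    return int(BASE_CLOCK_MHZ * transfers_per_clock * bytes_per_transfer)


if __name__ == "__main__":
    for name, tpc in MODES.items():
        print(f"{name}: ~{peak_mb_per_s(tpc):,} MB/s on a 64-bit bus")
    # Hypothetical: the same quad-rate signaling over a 16-bit-wide link.
    print(f"PCI-X 533, 16-bit: ~{peak_mb_per_s(4, width_bits=16):,} MB/s")
```

Running it prints about 1,066, 2,133, and 4,266 MB/s for the three 64-bit modes, and about 1,066 MB/s for the narrower 16-bit case, which shows the bandwidth trade-off behind the space-saving variant.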

Think of it as a speed‑up summer road trip, only the road was a circuit board.

Reliability, But Make It Fancy

PCI‑X 2.0 didn’t just crank up the numbers; it added some “revamp” tricks to keep the bus alive:

- Protocol tweaks that make the data path less finicky.

- Built-in error-correcting codes (ECC), because nobody wants a corrupted packet sneaking through.

In short, it’s like swapping out a low‑quality coffee machine for one that auto‑remembers your latte preference.

Space‑Saving 16‑Bit Ports

One of the classic pain points was the bulky 184-pin connector of 64-bit slots. The fix? A 16-bit interface designed for space-constrained embedded devices. The idea: squeeze data into a smaller footprint without dropping a byte.

Peer‑to‑Peer (P2P) – No CPU Drama

Borrowing an idea similar to PCI Express, PCI-X 2.0 added "PtP" functions that let devices on the bus talk straight to each other. The result? No CPU bottleneck, no bus controller drama, just smooth, direct conversations.

Why It Never Took Off

Despite its neat tricks, the ecosystem never gave PCI‑X 2.0 the spotlight it deserved. The main reason is simple: hardware vendors opted to jump straight to PCI Express.

So, while PCI‑X 2.0 was a solid project in 2003, the tech world decided it was better to ride on the newer, forward‑leaning express train.